Free Idea: Train on Changes, Not on Code

There’s no way, augmented or not, for me to follow up on all the ideas I’m generating as I explore augmented coding. Here’s one for free that I may or may not try myself. I really really really want it to work, though, and I’ll pay to use it if you make good progress.

Here’s the premise: LLMs are trained on snapshots of code (as I understand the current practice—please correct me if I’m wrong). They look at a large code base at a point in time & see, for example, lots of stuff that’s there for “backwards compatibility”. So they generate or retain a lot of code for “backwards compatibility” whether or not there is a backwards to be compatible with.

This applies to more complex design patterns as well. I’ve had genies insert factories & registries & interfaces where they served absolutely no purpose but to obscure the structure of the code. I assume the model saw lots of code bases that used these patterns & decided they were good. And maybe they were good in the code the genie trained on, but not in my little projects, not yet anyway.


Ch-ch-ch-ch-changes

The first problem with training on big snapshots of code bases is this bias towards big-codebase techniques. There’s nothing in the training, that I can see anyway, about when the technique crossed from “annoying complexity” to “essential simplification”. Nothing about why.

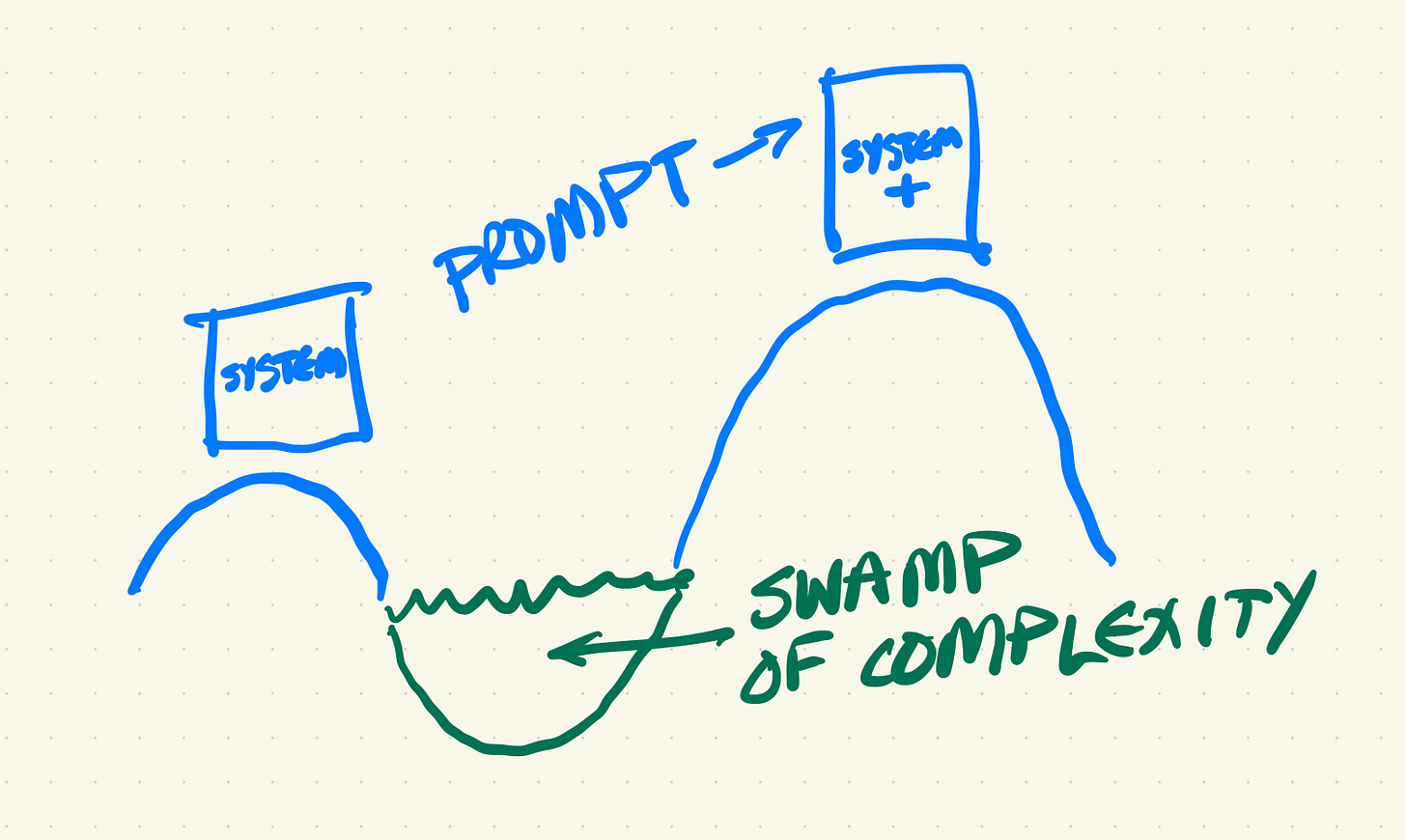

The second problem of training on big snapshots of code bases is that the genie becomes overconfident (yes, I know I’m anthropomorphizing here but heck I’m pairing with a machine at 32,000 feet so I’m cutting myself some slack). The genie has a system, sees a prompt, & wants to make changes until it gets to the new system.

The bigger & more complex the system, the greater the chance that the genie will get stuck in the swamp of complexity. It simply can’t get there from here, not with the current size of context windows & the current level of genie planning & execution.

Small, Safe Steps

Now maybe this is just me being an old fuddy duddy, but:

I have a host of techniques I use while programming so things don’t get much worse before they get better, &

I’d like my genie to use those techniques.

I’m talking about techniques like programmer testing, test first, parallels, tidy first, “make the change easy”, fast tests, separating behavior & structure changes, separating interface & implementation changes.

I can get genies doing a little bit of this stuff sometimes using system prompts. But the genie is astonishingly bad at safe sequencing & willing to abandon it at the first signs of resistance. This is a pity, too, because the genie has the potential to be really good at small, safe steps. As a human I sometimes get side-tracked in the middle of a sequence. My genie wouldn’t.

Training?

Say we start with a tiny little system. Then we introduce a change that makes it a little bigger. Then another. As my friend Keith Adams put it, “There’s much more information in the right triangle of accumulating changes than there is in the final result at the base of the triangle.”

The following is complete speculation on my part. I’ve never trained a large model. But here’s what I would try.

The first level of training is to find code bases that have evolved through lots of small, safe changes & train the model on the diffs as well as the code.
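One way to picture “train on the diffs as well as the code”: walk a project’s history & emit (before, diff, after) triples instead of only the final snapshot. This is a minimal sketch, assuming the history is already available as an ordered list of one file’s contents; the `make_examples` helper & its dictionary fields are hypothetical, not the format of any real training pipeline.

```python
import difflib

def make_examples(snapshots, filename="module.py"):
    """Turn an ordered list of file snapshots into (before, diff, after) examples.

    Hypothetical format: each example pairs a snapshot with the unified diff
    that produced the next one, so a model sees each step, not just the result.
    """
    examples = []
    for before, after in zip(snapshots, snapshots[1:]):
        diff = "".join(difflib.unified_diff(
            before.splitlines(keepends=True),
            after.splitlines(keepends=True),
            fromfile=f"a/{filename}",
            tofile=f"b/{filename}",
        ))
        examples.append({"before": before, "diff": diff, "after": after})
    return examples

# A tiny made-up history: each snapshot is one small change away from the last.
history = [
    "def total(xs):\n    return 0\n",
    "def total(xs):\n    return sum(xs)\n",
    "def total(xs, start=0):\n    return start + sum(xs)\n",
]

for example in make_examples(history):
    print(example["diff"])
```

Three snapshots yield two examples. A model trained only on `history[-1]` never sees that `start=0` arrived after `sum(xs)`, or why.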

The next level is noting that even small, safe diffs are composed (or can/should be composed) of even smaller, safer changes: individual refactorings strung together, individual behavior changes backed by tests. I want my genie to understand & plan for code changes at this level of detail, not “oh I edit this file then I edit that file”. I’m not sure how to train for this. Maybe generate & test & note which sequences of changes are likely to lead to better programs.
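To make “individual refactorings strung together, individual behavior changes backed by tests” concrete, here is a sketch of executing such a plan: each step is labeled structure or behavior, tests run after every step, & the run stops at the first red step, keeping the last green program. Everything here is an assumption for illustration: `Step`, `is_green`, & `run_steps` are hypothetical names, programs are plain source strings, & tests are functions over the namespace produced by `exec` (fine for a toy, not for untrusted code).

```python
from dataclasses import dataclass
from typing import Callable, List

Test = Callable[[dict], bool]    # hypothetical: a test inspects the exec'd namespace

@dataclass
class Step:
    kind: str                    # "structure" (a refactoring) or "behavior" (a test-backed change)
    description: str
    apply: Callable[[str], str]  # old source -> new source
    tests: List[Test]            # tests that must be green after this step

def is_green(source: str, tests: List[Test]) -> bool:
    namespace: dict = {}
    try:
        exec(source, namespace)
        return all(test(namespace) for test in tests)
    except Exception:
        return False

def run_steps(source: str, steps: List[Step]):
    """Apply steps one at a time; stop at the first one that turns tests red.

    Returns (last green source, descriptions of applied steps, failing step or None).
    """
    done = []
    for step in steps:
        candidate = step.apply(source)
        if not is_green(candidate, step.tests):
            return source, done, step
        source, done = candidate, done + [step.description]
    return source, done, None

def rename_params(s):          # structure: same behavior, clearer names
    return s.replace("w, h", "width, height").replace("w * h", "width * height")

def default_height(s):         # behavior: omitting height means a square
    return s.replace("height):", "height=None):").replace(
        "    return width * height",
        "    if height is None:\n        height = width\n    return width * height")

def break_it(s):               # a bad step the tests should catch
    return s.replace("width * height", "width + height")

old_tests = [lambda ns: ns["area"](2, 3) == 6]
new_tests = old_tests + [lambda ns: ns["area"](2) == 4]

final, done, failed = run_steps("def area(w, h):\n    return w * h\n", [
    Step("structure", "rename parameters", rename_params, old_tests),
    Step("behavior", "square when height omitted", default_height, new_tests),
    Step("behavior", "bogus change", break_it, new_tests),
])
print(done)                # ['rename parameters', 'square when height omitted']
print(failed.description)  # bogus change
```

The point of the design is the early return: a red step doesn’t get “fixed forward” by piling on more edits, which is exactly where a genie tends to abandon the sequence.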

The last level that makes sense to me is to run all changes as syntax tree transforms instead of text edits. I’ve been absolutely astonished at how well LLMs do using tokens to predict code, but I don’t see how they’ll ever get really good at making changes without knowing about the structure of code.
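For a feel of the difference, here is a rename expressed as a syntax tree transform using Python’s standard `ast` module (`ast.unparse` needs Python 3.9+). `RenameVariable` is a hypothetical, deliberately simplified refactoring: it ignores scoping, so a real tool would resolve scopes before renaming.

```python
import ast

class RenameVariable(ast.NodeTransformer):
    """Rename a variable wherever it appears as a name or a parameter.

    Sketch only: no scope analysis, so a same-named variable in another
    function would be renamed too.
    """

    def __init__(self, old: str, new: str):
        self.old, self.new = old, new

    def visit_Name(self, node: ast.Name) -> ast.Name:
        if node.id == self.old:
            node.id = self.new
        return node

    def visit_arg(self, node: ast.arg) -> ast.arg:
        self.generic_visit(node)   # visit any annotation first
        if node.arg == self.old:
            node.arg = self.new
        return node

source = '''
def greet(n):
    msg = "n is for name"
    return msg + ": " + n
'''

tree = RenameVariable("n", "name").visit(ast.parse(source))
new_source = ast.unparse(tree)
print(new_source)
```

The transform touches only identifiers. A plain text substitution of `n` would also hit `return` & the string literal, which is the kind of structural blindness a token-level edit can fall into.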

As I said, all of the above could be wildly wrong, overtaken by evolution of general genies, or just plain ineffective. But I can dream.

Generate & Test

Another approach is to use refactorings + the transformation priority premise[1] to generate a host of successor programs, then only hang on to the promising ones. After doing this for a while, the genie could learn which structure & behavior changes are most likely to result in a “fit” successor.
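A toy version of generate & test, with heavy assumptions: programs are one-line arithmetic expressions in `x`, the three rewrite rules in `successors` are hypothetical stand-ins for refactorings & priority-premise transformations, & “promising” means lower total error against a few test cases. A beam search keeps only the best few successors each generation. `eval` is used only because the candidate strings are generated by the sketch itself.

```python
# Test cases the program must satisfy: (input, expected output).
TESTS = [(0, 1), (1, 2), (2, 5), (3, 10)]

def run(expr, x):
    return eval(expr, {"x": x})   # toy only: the expressions are generated here

def error(expr):
    """Fitness: total distance from the expected outputs (0 means all tests pass)."""
    return sum(abs(run(expr, x) - want) for x, want in TESTS)

def successors(expr):
    # Hypothetical rewrite rules standing in for real transformations.
    return [f"{expr} + 1", f"{expr} * x", "x"]

def search(start="0", beam_width=3, generations=10):
    beam = [start]
    for _ in range(generations):
        candidates = {s for expr in beam for s in successors(expr)}
        # Keep the most promising successors; ties broken by text for determinism.
        beam = sorted(candidates, key=lambda e: (error(e), e))[:beam_width]
        if error(beam[0]) == 0:
            return beam[0]
    return None

print(search())  # x * x + 1
```

The fitness function matters. A coarser measure such as “number of tests passed” would score `x * x` at zero, since it passes none of these tests, & might discard it even though it is one step from the answer. Learning which successors tend to be fit is the part Kent suggests the genie could pick up.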

Justified Confidence

However the next generation of genies is structured or trained, the result I’m looking for is an augmentation that works better—fewer dead ends, less useless code, fewer defects, quicker, cheaper. Perhaps it’s not surprising that I see genies in horseless carriage terms. I just want a genie that codes like I do at my best. And then I want them to be better.

[1] The transformation priority premise is to behavior changes what refactorings are to structure changes. When a test fails, use the lowest-numbered transformation to get it passing. Robert Martin listed them as:

({} → nil): No code at all → Code that returns or employs nil

(nil → constant): Replace nil with a constant value

(constant → constant+): Replace a simple constant with a more complex constant

(constant → scalar): Replace a constant with a variable or argument

(statement → statements): Add more unconditional statements

(unconditional → if): Introduce a conditional (split the execution path)

(scalar → array): Replace a scalar with an array

(array → container): Replace an array with a more complex container (e.g., list, map)

(statement → tail-recursion): Replace a statement with a tail-recursive structure

(if → while): Replace a conditional with a loop

(statement → non-tail-recursion): Replace a statement with a non-tail-recursive structure

(expression → function): Replace an expression with a function or algorithm

(variable → assignment): Replace the value of a variable (introduce assignment)

(case): Add a case (or else) to an existing switch or if statement
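The rule “use the lowest-numbered transformation” is simple enough to state as code. A minimal sketch, assuming something else (a human, a genie, a search) has already found which transformations would each make the failing test pass; `TPP` & `choose` are hypothetical names, & the arrows are written as ASCII `->`.

```python
# The ordering listed above; a lower index means a simpler, preferred transformation.
TPP = [
    "{} -> nil",
    "nil -> constant",
    "constant -> constant+",
    "constant -> scalar",
    "statement -> statements",
    "unconditional -> if",
    "scalar -> array",
    "array -> container",
    "statement -> tail-recursion",
    "if -> while",
    "statement -> non-tail-recursion",
    "expression -> function",
    "variable -> assignment",
    "case",
]

def choose(candidates):
    """Among transformations that would each make the failing test pass,
    return the one the premise prefers: the lowest-numbered.
    Raises ValueError if a candidate is not in the list."""
    return min(candidates, key=TPP.index)

print(choose(["if -> while", "unconditional -> if", "expression -> function"]))
# unconditional -> if
```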
