Another raw augmented coding observation. One of my projects is a server-grade implementation of Smalltalk, virtual machine on up. This is not a simple project nor a short one. I wouldn’t have even attempted it without a genie.

A significant limitation I’ve experienced is a complexity cliff the genie runs off when adding features (at the end I’ll propose my response).

Much thanks to today’s sponsor, Augment Code. I use Augment Code daily in my …ahem…augmented coding adventures.

Augment just released an early version of Augment Code: Remote Agent. Take the same sorts of prompts you currently sit & watch but now run them asynchronously. As more of augmented coding becomes routine, this enables even bigger leaps ahead.

Access to Augment Agent is limited for the moment. You’ll be a pioneer, with all that implies. But dang, there’s some wonderful stuff to explore. Sign up here to get on the wait list.

Climbing Feature Hill

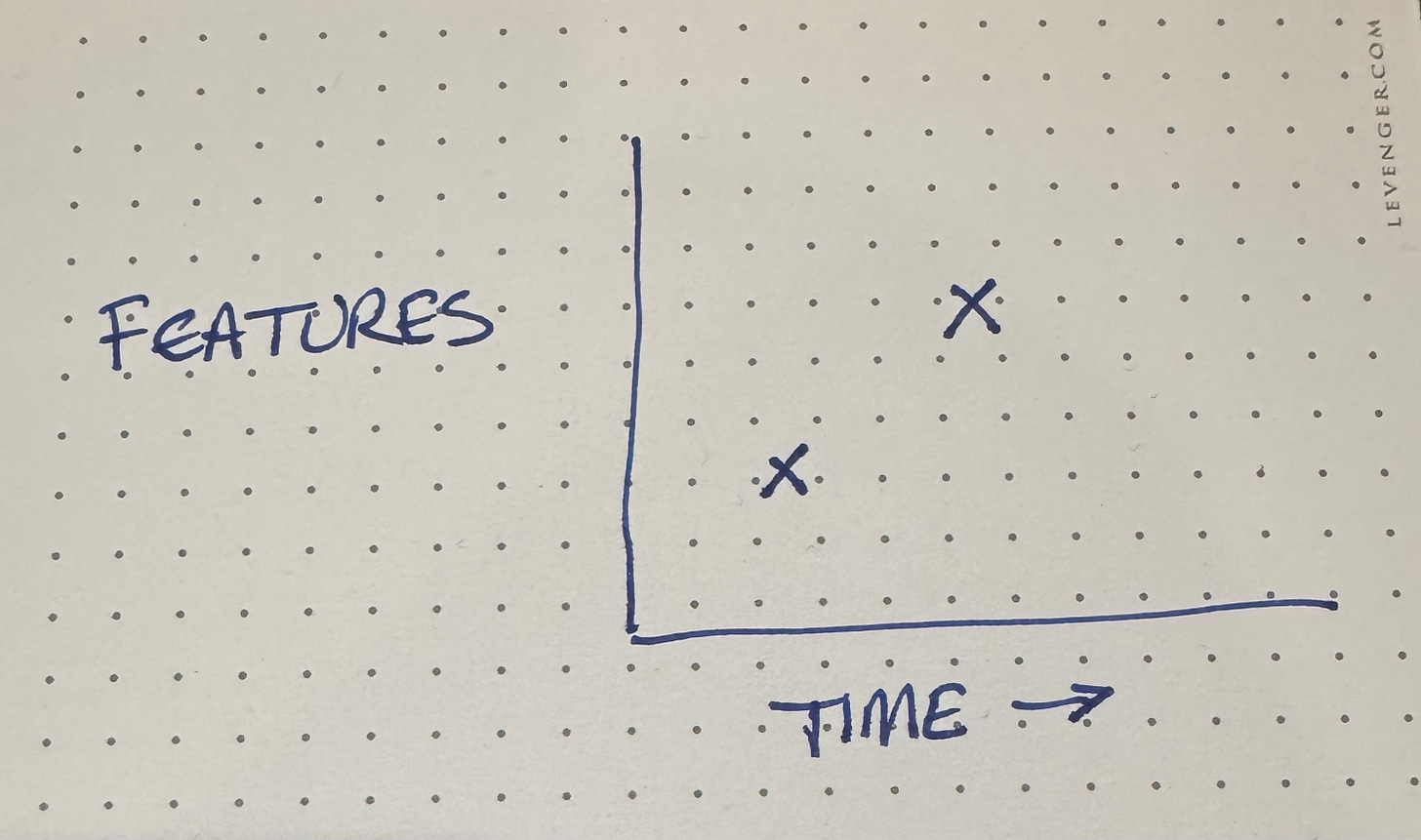

We’ve given the genie a prompt. It will now reward itself if it can plausibly say it has added a feature. How to get there from here?

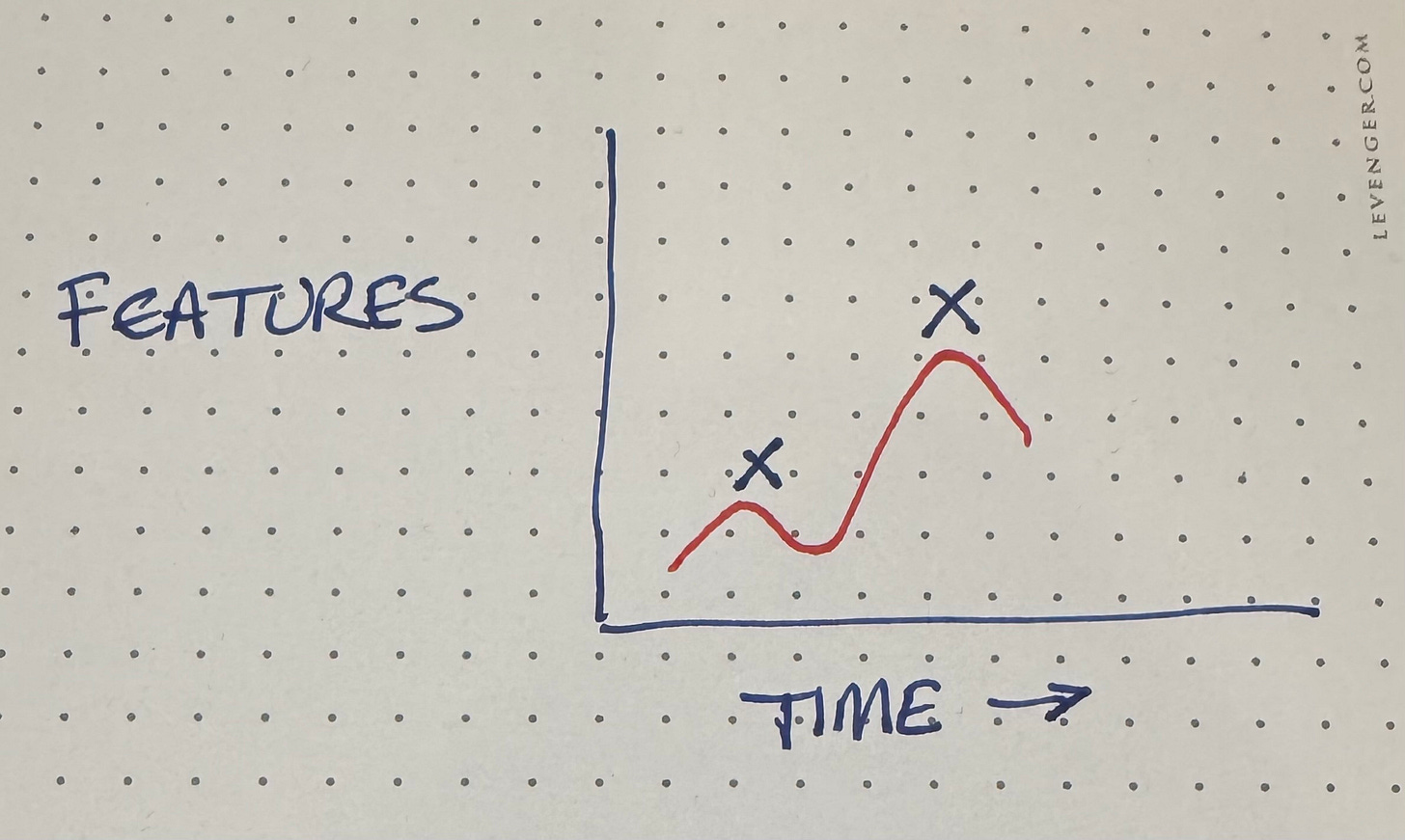

We know that things always get worse before they get better (if you look closely enough). A fuller picture looks like this:

The system is going to get more complicated before it gets more capable. The genie doesn’t mind. With boundless confidence it starts “fixing” one thing. And another. And another. Until it gets to the next higher peak.

Pit of Despair

That’s the best case scenario. In practice, you don’t always know how far down you’re going to have to go before you can start back up. What happens when the complexity gets to be too much?

Now the complexity has gotten to be too much for the genie. There’s no path consisting only of tiny steps getting from here to there.

The genie doesn’t respond well to this situation. I’ve seen infinite loops. I’ve seen truly pernicious behavior like deleting assertions from tests, deleting whole tests, & faking large swathes of implementation. These behaviors may allow the genie to reward itself with a biscuit, but they destroy trust, my trust. And just when I was starting to have fun programming again…

Parallels

One missing technique in the genie’s skill set is parallels. The genie’s first strategy is to just change the thing. For example I had a data structure that took uint64’s as keys. I asked the genie to make a generic version of the data structure that took a type as a parameter. It started changing uint64 to T little by little until the problems this introduced made further progress impossible.

The genie’s second strategy is (and here I’m generalizing from the various tools I’m using) large-scale parallels. We have a Node struct? We’ll make a GenericNode struct. And so on through the whole implementation.

What I observe about this strategy is that the combination of non-determinism & sensitivity to initial conditions means that what the genie comes up with the second time can be radically different from, and sometimes inferior to, the original version.

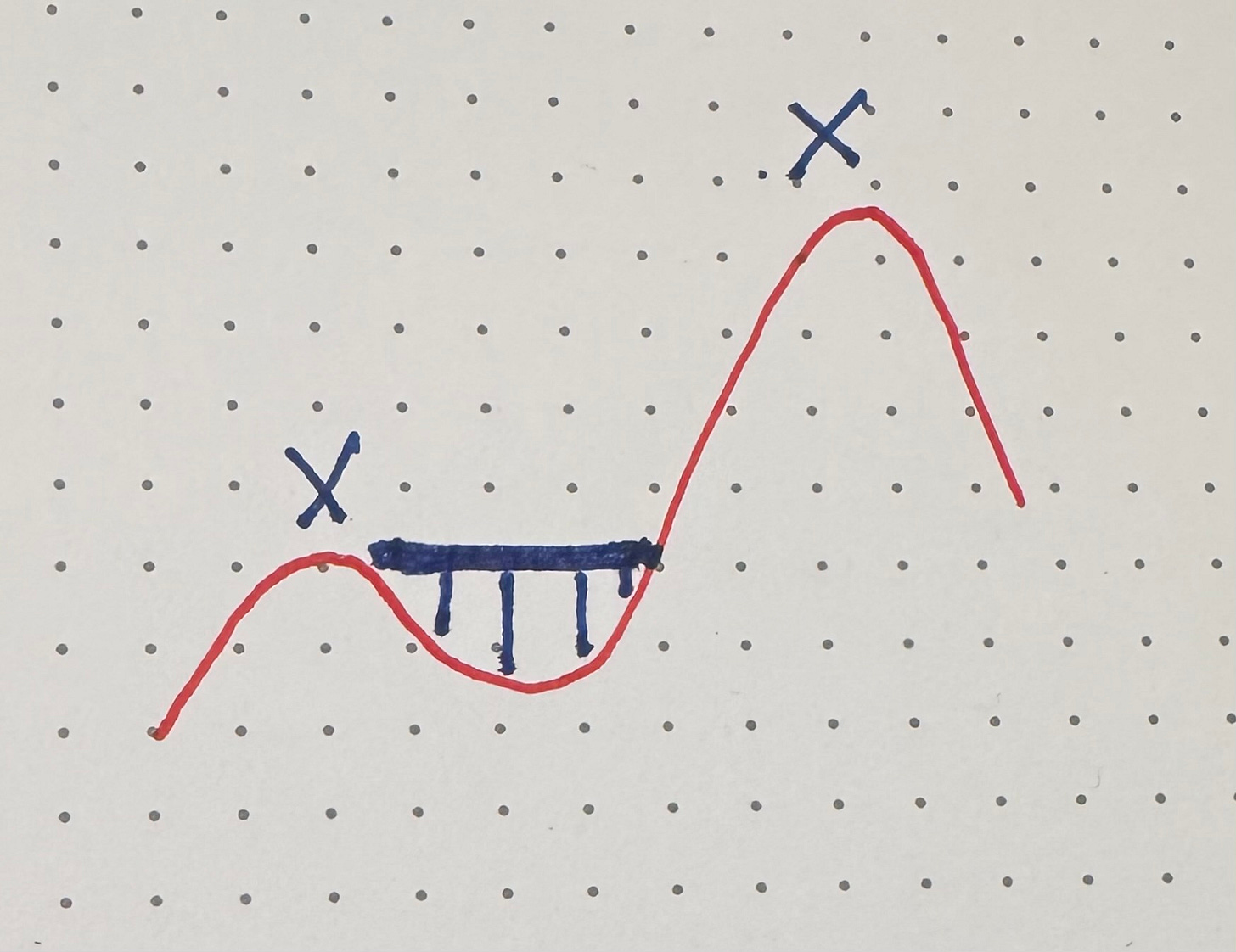

The strategy that seems to be missing is parallels—having the two implementations coexist for a while. We’d start with this:

Node

key uint64

The first thing I’d do is add a type parameter to the data structure without using it.

Node<T>

key uint64

Then I’d start testing a version that used uint64 as the type parameter.

node := Node<uint64>

…
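As a rough sketch of this first step in Go (the snippets in this post are pseudocode, so the linked-list shape and the `Insert` and `Contains` helpers here are hypothetical names invented for illustration): the type parameter is declared but nothing reads or writes a `T` yet, so existing behavior cannot change.

```go
package main

import "fmt"

// Node is a hypothetical list node. T is declared but not used for
// storage yet, so behavior matches the non-generic version.
type Node[T any] struct {
	key  uint64
	next *Node[T]
}

// Insert pushes key onto the front of the list and returns the new head.
func Insert[T any](head *Node[T], key uint64) *Node[T] {
	return &Node[T]{key: key, next: head}
}

// Contains reports whether key is stored anywhere in the list.
func Contains[T any](head *Node[T], key uint64) bool {
	for n := head; n != nil; n = n.next {
		if n.key == key {
			return true
		}
	}
	return false
}

func main() {
	// The only instantiation so far uses uint64 as the type parameter.
	var head *Node[uint64]
	head = Insert(head, 42)
	fmt.Println(Contains(head, 42), Contains(head, 7)) // prints: true false
}
```

Every existing test should still pass at this point, because only the spelling of the type has changed.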

Then I’d add a second version of storage for the keys:

Node<T>

key uint64

newKey T

Now everywhere the key gets set, the new key gets set as well:

key = value

newKey = (T) value

(Note that this step is wonky. If I try to use the data structure over strings, this code will break. That’s okay. We’re in a transitional state. The transition will be over soon.)

With this we are guaranteed that key & newKey contain the same value. We start reading from newKey.

…(uint64) newKey…
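A minimal Go sketch of this transitional state, assuming a hypothetical linked list with invented `Insert` and `Contains` helpers. Go has no direct `(T) value` cast for an unconstrained type parameter, so the sketch substitutes a type assertion through `any`. That substitution is just as wonky as the cast in the pseudocode: it panics at run time for any `T` other than `uint64`.

```go
package main

import "fmt"

// Node stores the key twice while the transition is in progress.
type Node[T any] struct {
	key    uint64 // old storage, still written on every insert
	newKey T      // new storage, now the field that gets read
	next   *Node[T]
}

// Insert writes both fields. The assertion any(key).(T) is the
// transitional hack: it panics unless T is uint64.
func Insert[T any](head *Node[T], key uint64) *Node[T] {
	return &Node[T]{key: key, newKey: any(key).(T), next: head}
}

// Contains reads from newKey and converts it back to uint64.
func Contains[T any](head *Node[T], key uint64) bool {
	for n := head; n != nil; n = n.next {
		if any(n.newKey).(uint64) == key {
			return true
		}
	}
	return false
}

func main() {
	var head *Node[uint64]
	head = Insert(head, 42)
	// Both copies of the key agree, so switching reads is safe.
	fmt.Println(head.key == head.newKey, Contains(head, 42)) // prints: true true
}
```

Because the write path keeps the two fields identical, moving reads over to `newKey` one call site at a time leaves the tests green throughout.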

Note that the code compiles & the tests run at every step (not part of the genie’s strategy—it seems to be incentivized for the big TA-DA! moment).

Now we can stop assigning to key, delete it since it’s no longer used, stop casting the value of newKey, & rename newKey to key.

Node<T>

key T

Now we can create nodes of any type we want, and all without ever being at risk of exceeding our complexity budget & having to abandon our effort.
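For illustration, the end state might look like this in Go. The list shape and the `Insert` and `Contains` helpers are hypothetical, and the `comparable` constraint is an assumption added here so that keys can be compared with `==`; the pseudocode does not specify one.

```go
package main

import "fmt"

// Node is fully generic: the old uint64 field and the casts are gone.
type Node[T comparable] struct {
	key  T
	next *Node[T]
}

// Insert pushes key onto the front of the list and returns the new head.
func Insert[T comparable](head *Node[T], key T) *Node[T] {
	return &Node[T]{key: key, next: head}
}

// Contains reports whether key is stored anywhere in the list.
func Contains[T comparable](head *Node[T], key T) bool {
	for n := head; n != nil; n = n.next {
		if n.key == key {
			return true
		}
	}
	return false
}

func main() {
	// The original uint64 use keeps working.
	var nums *Node[uint64]
	nums = Insert(nums, uint64(42))

	// String keys, which would have broken mid-transition, work too.
	var words *Node[string]
	words = Insert(words, "genie")

	fmt.Println(Contains(nums, uint64(42)), Contains(words, "genie")) // prints: true true
}
```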

Bridge

This parallels strategy for design change reduces the risk of changing a bunch of stuff, breaking the system’s behavior, & never getting it back.

At the cost of increased complexity for the duration of the change, we just don’t have to worry about breaking the system. Not that the genie seems to worry about that. Topic for another day.

In practice many refactorings are: make the change; try to fix the consequences; if that demoralises, then roll back and plan an incremental approach. At the moment the genie doesn’t ever seem to be demoralised. Unless you count the “too many failures, aborted”.

I can’t help but wonder, given the amazing introspection that comes with Smalltalk, what would happen if we let the genie loose “inside” the Smalltalk IDE.

Think of the Squeak/Pharo Finder Browser in Example mode, with the genie driving to find the best/shortest path to pass the tests. So instead of just a one-method answer you’d get multiple method “riffs” to get there. Brute force wouldn’t get you there, but with the time, insight, and learning ability of the genie, that might be promising.