I’m a geek speaking to you, a technology-savvy executive, about why we are doing things in a more complicated way than seems necessary. You may have heard the word “bi-temporal”. What’s that about?

In a nutshell, we want what’s recorded in the system to match the real world. We know this is impossible (delays, mistakes, changes), but we are getting as close as we can. The promise is that if what’s in the system matches the real world as closely as possible, costs go down, customer satisfaction goes up, & we can scale further, faster.

Here’s how it works.

Scenarios

We’ll take addresses as our example. Addresses are useful for sending correspondence, calculating taxes, determining regulations, & targeting marketing. Addresses, though, change.

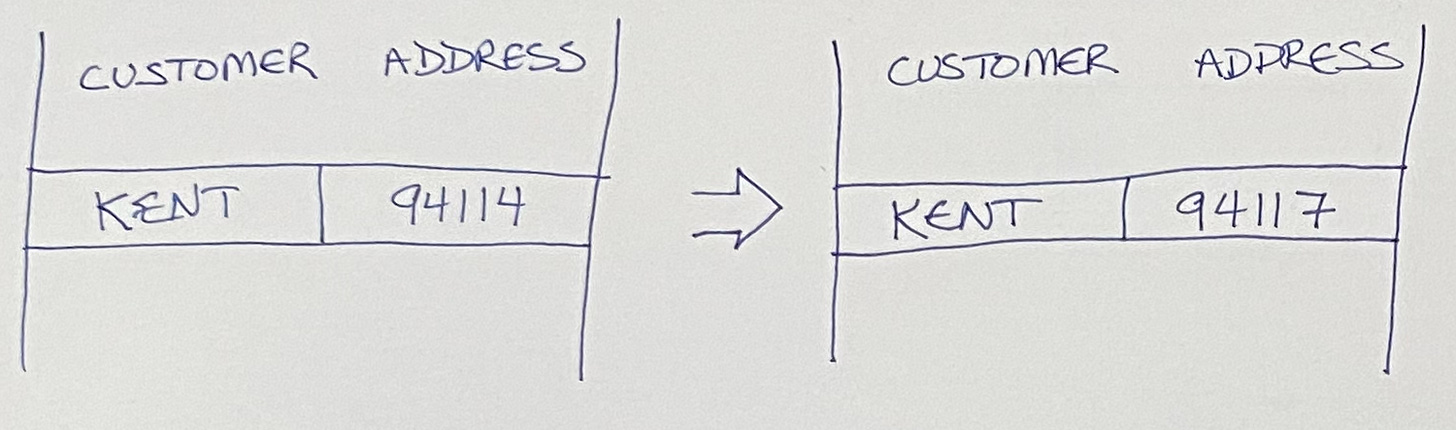

Simplest—we’ll just store the address in the database. When the address changes, we’ll change what’s in the database. Finito.

Not quite. The customer calls and says, “Why did you charge me California sales tax for this order? I don’t live in California. 🤬” We look in the database & there it is, a California address. “I just moved, you numbskulls. The order was sent to me when I was in Colorado.”

We’re terribly sorry for the mistake. Here’s a voucher for an ice cream cone.

Not a good experience for the customer. Not a good experience for us.
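A minimal Python sketch of the “simplest” design shows why we can’t answer the customer. It assumes a plain dict stands in for the database table, and `addresses`, `move`, & `tax_state_for_order` are hypothetical names, not anything from a real system. Because a move overwrites the only copy, nothing records where the customer lived when they ordered.

```python
# Hypothetical single-value store: exactly one current address per customer.
addresses: dict[str, str] = {}


def move(customer: str, state: str) -> None:
    """Overwrite the customer's address; the previous value is lost for good."""
    addresses[customer] = state


def tax_state_for_order(customer: str) -> str:
    """The only answer available is the current address, whenever the order was placed."""
    return addresses[customer]


move("alice", "CO")
move("alice", "CA")  # the Colorado address is now gone
print(tax_state_for_order("alice"))  # prints "CA", with no record of the earlier address
```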

Dated data—we choose to remember the history of all the addresses & tag each one with the date it took effect. This is more complicated. Now the customer service screens have to display a list of addresses instead of just one. The database will be bigger because we don’t throw addresses away. The code will be more complicated because we can no longer ask, “Here’s a customer. What’s their address?” Instead we have to ask, “Here’s a customer. What’s their address on this date?”

Yes, it’s more complicated, but in return we get to answer our irate customer’s question. “Our records show that you placed the order on June 15 & you moved on June 1.” Oh.
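Here is a minimal sketch of date-tagged addresses. It assumes an in-memory list per customer rather than a real database; `record_move` & `address_on` are hypothetical names, & the years are made up for illustration. The new question, “What’s their address on this date?”, becomes a search for the latest move on or before that date.

```python
from bisect import bisect_right
from datetime import date
from typing import Optional

# Hypothetical dated history: customer -> [(effective_date, state), ...], kept sorted by date.
history: dict[str, list[tuple[date, str]]] = {}


def record_move(customer: str, effective: date, state: str) -> None:
    """Remember every address together with the date it took effect; nothing is thrown away."""
    entries = history.setdefault(customer, [])
    entries.append((effective, state))
    entries.sort()


def address_on(customer: str, day: date) -> Optional[str]:
    """Answer "What's their address on this date?": the latest move on or before `day`."""
    entries = history.get(customer, [])
    dates = [effective for effective, _ in entries]
    i = bisect_right(dates, day)
    return entries[i - 1][1] if i else None


record_move("alice", date(2020, 1, 1), "CO")
record_move("alice", date(2024, 6, 1), "CA")  # moved June 1
print(address_on("alice", date(2024, 6, 15)))  # CA: the June 15 order came after the move
print(address_on("alice", date(2024, 5, 31)))  # CO
```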

Okay, so date-tagged data is better for us at the cost of a bit more complexity.

However… What happens when a customer says, “Oh, by the way, I moved 2 months ago”? What date do we use for the tag? Today? Then we won’t know that we need to recalculate 2 months’ worth of statements. Two months ago? Then we won’t be able to explain (to the customer, tax authorities, whoever) why we did what we did.

The fundamental, inescapable problem? What is in the system is a flawed reflection of what is going on in reality. We want what is in the system to be as close as possible to reality, but we also need to acknowledge that consistency between the system & reality will only ever be approached, not achieved. The system will record changes in reality eventually, but by then we may have made decisions that need to be undone.

Sound difficult? Only a little more than what we’ve done already.

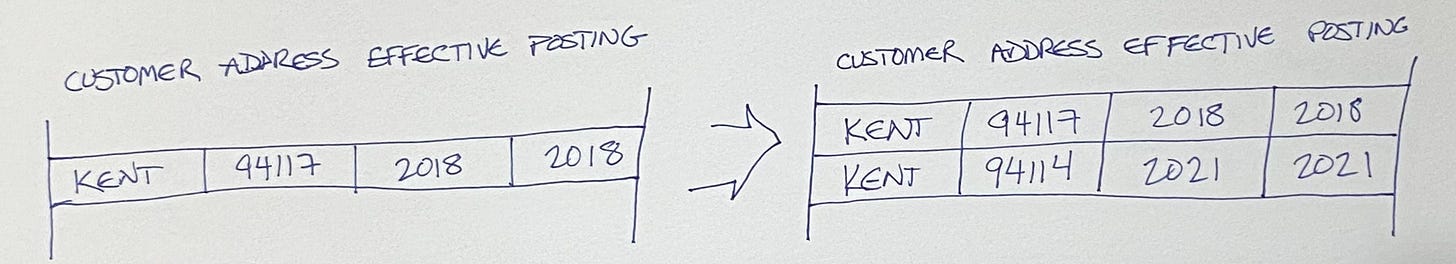

Double-dated data—we tag each bit of business data with 2 dates:

- The date on which the data changed out in the real world, the effective date.
- The date on which the system found out about the change, the posting date.

This is the simple case where effective & posting dates match.

(These 2 dates are why we call such systems “bi-temporal”. We have 2 timelines. One is the timeline of when things actually happened, the other the timeline of when we found out about it. The purpose of the 2 dates is to make sure that our system is eventually consistent with reality.)

Another way to look at the example above is to show the timelines explicitly.
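The 2 timelines can also be sketched in code. This is a minimal, append-only sketch under stated assumptions: an in-memory list of hypothetical `AddressFact` records, each carrying an `effective` date & a `posted` date, plus a hypothetical `address_as_of` query that asks where, as of one posting date, we believed the customer lived on some effective date. It shows the simple case where both dates match.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AddressFact:
    customer: str
    state: str
    effective: date  # when the move happened out in the real world
    posted: date     # when the system found out about it


facts: list[AddressFact] = []  # append-only: corrections are new facts, never edits


def post(fact: AddressFact) -> None:
    facts.append(fact)


def address_as_of(customer: str, effective_on: date, known_on: date) -> Optional[str]:
    """As of `known_on`, where did we believe the customer lived on `effective_on`?"""
    candidates = [
        f for f in facts
        if f.customer == customer and f.effective <= effective_on and f.posted <= known_on
    ]
    if not candidates:
        return None
    # Latest effective date wins; for the same effective date, the latest posting wins.
    return max(candidates, key=lambda f: (f.effective, f.posted)).state


# The simple case: each move is posted on the day it takes effect.
post(AddressFact("alice", "CO", effective=date(2020, 1, 1), posted=date(2020, 1, 1)))
post(AddressFact("alice", "CA", effective=date(2024, 6, 1), posted=date(2024, 6, 1)))
print(address_as_of("alice", date(2024, 6, 15), known_on=date(2024, 6, 15)))  # CA
print(address_as_of("alice", date(2024, 5, 15), known_on=date(2024, 6, 15)))  # CO
```

One simplification worth flagging: a later fact only overrides an earlier one by having a later effective date, or the same effective date & a later posting date. A production design would also need a way to retract a fact outright (“that move never happened”), which this sketch leaves out.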

And Now…

Using effective & posting dates together we can record all the strange twists & turns of feeding data into a system.

“I forgot to tell you that I moved last year.”

“You got last year’s address change wrong.”

“I’m going to move next year.”

And this is the magic one, the nightmare scenario that just can’t be automatically handled otherwise.

“You got last year’s address change wrong. I actually moved 2 years ago.”
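Here’s how those conversations might land in a double-dated store, again as a minimal in-memory sketch with hypothetical names (`AddressFact`, `post`, `address_as_of`) & made-up dates, taking “today” to be mid-2025. Every statement from the customer becomes a new fact & nothing is edited, so we can ask both what we believe now & what we believed at any earlier moment. A wrong address on last year’s move (same date, different state) would be handled the same way: a new fact with the same effective date & a later posting date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AddressFact:
    customer: str
    state: str
    effective: date  # when it happened (or will happen) in the real world
    posted: date     # when the system was told


facts: list[AddressFact] = []


def post(customer: str, state: str, effective: date, posted: date) -> None:
    """Every statement from the customer becomes a new fact; nothing is overwritten."""
    facts.append(AddressFact(customer, state, effective, posted))


def address_as_of(customer: str, effective_on: date, known_on: date) -> Optional[str]:
    candidates = [
        f for f in facts
        if f.customer == customer and f.effective <= effective_on and f.posted <= known_on
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda f: (f.effective, f.posted)).state


post("alice", "CO", effective=date(2015, 1, 1), posted=date(2015, 1, 1))
# "I forgot to tell you that I moved last year."
post("alice", "CA", effective=date(2024, 6, 1), posted=date(2025, 6, 1))
# "I'm going to move next year."
post("alice", "TX", effective=date(2026, 6, 1), posted=date(2025, 6, 1))
# "You got last year's address change wrong. I actually moved 2 years ago."
post("alice", "CA", effective=date(2023, 6, 1), posted=date(2025, 7, 1))

# Late news: what we believed about Sept 2024, before & after being told.
print(address_as_of("alice", date(2024, 9, 1), known_on=date(2025, 5, 1)))  # CO
print(address_as_of("alice", date(2024, 9, 1), known_on=date(2025, 6, 1)))  # CA
# The nightmare correction changes our belief about late 2023, but only from July 2025 on.
print(address_as_of("alice", date(2023, 12, 1), known_on=date(2025, 6, 15)))  # CO
print(address_as_of("alice", date(2023, 12, 1), known_on=date(2025, 7, 15)))  # CA
# A future move is already on file.
print(address_as_of("alice", date(2026, 7, 1), known_on=date(2025, 7, 15)))  # TX
```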

To process this scenario accurately, we need to undo 2 years’ worth of processing, redo those years with the correct address, then continue from there. Without both dates, we’re throwing the corrections into an account called “Manual Corrections” & praying those corrections won’t cause problems in the future (praying in vain, as it turns out).
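Both dates together let the system find what needs redoing. A minimal sketch, assuming (hypothetically) that each order is processed on the day it’s placed & that the sales tax state is simply the address state: compare the address we used then (posting cutoff = order date) with the address we believe now, & flag every order where they differ. The names `Order` & `orders_to_reprocess` are illustrative. The sketch only finds the orders to undo & redo; it doesn’t post any reversing entries.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class AddressFact:
    customer: str
    state: str
    effective: date  # when the move happened
    posted: date     # when we learned about it


@dataclass(frozen=True)
class Order:  # hypothetical: assumed to be processed on the day it was placed
    order_id: str
    customer: str
    placed: date


def address_as_of(facts: list[AddressFact], customer: str,
                  effective_on: date, known_on: date) -> Optional[str]:
    candidates = [f for f in facts if f.customer == customer
                  and f.effective <= effective_on and f.posted <= known_on]
    if not candidates:
        return None
    return max(candidates, key=lambda f: (f.effective, f.posted)).state


def orders_to_reprocess(facts: list[AddressFact], orders: list[Order],
                        known_now: date) -> list[tuple[str, Optional[str], Optional[str]]]:
    """(order_id, state used then, state we believe now) for every order that needs redoing."""
    redo = []
    for o in orders:
        used = address_as_of(facts, o.customer, o.placed, known_on=o.placed)      # what we knew then
        correct = address_as_of(facts, o.customer, o.placed, known_on=known_now)  # what we know now
        if used != correct:
            redo.append((o.order_id, used, correct))
    return redo


facts = [
    AddressFact("alice", "CO", date(2015, 1, 1), date(2015, 1, 1)),
    AddressFact("alice", "CA", date(2024, 6, 1), date(2025, 6, 1)),  # late news
    AddressFact("alice", "CA", date(2023, 6, 1), date(2025, 7, 1)),  # "I actually moved 2 years ago"
]
orders = [
    Order("o1", "alice", date(2022, 1, 1)),
    Order("o2", "alice", date(2023, 9, 1)),
    Order("o3", "alice", date(2024, 9, 1)),
]
print(orders_to_reprocess(facts, orders, known_now=date(2025, 6, 15)))
# [('o3', 'CO', 'CA')]
print(orders_to_reprocess(facts, orders, known_now=date(2025, 7, 15)))
# [('o2', 'CO', 'CA'), ('o3', 'CO', 'CA')]
```

Before the nightmare correction only last year’s order needs redoing; after it, the earlier year joins the list, which is exactly the extra year of undo & redo described above.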

Conclusion

The goal of our design is eventual business consistency: what’s recorded in the system should match what’s happening in the real world as closely as possible, at a reasonable cost. Since perfect consistency is impossible:

- We save everything we learn.
- We track when changes occurred in reality.
- We track when we found out about those changes in the system.
- We invest additional programming complexity so we can:
  - Reduce costs (fewer complaints, faster resolution).
  - Reduce mistakes.
  - Improve compliance.

That’s the tradeoff. That’s what we’re doing when we say we are bi-temporal. GeekSpeak for “better business”.

I’d like to thank Massimo Arnoldi & the Lifeware gang for teaching me bi-temporality, teaching me how to effectively visualize the two timelines, & using bi-temporality to provide outstanding customer service to millions of insurance customers for decades.

Appendix: The Analogy

Bi-temporal data has been around since the early 1990s, based on the pioneering work of Richard Snodgrass. Part of the reason it hasn’t taken off is the additional complexity it imposes on programmers. However, I think part of the reason it hasn’t become more popular, given the benefits it brings, is just the name. Hence my proposed rebranding to “eventual business consistency”.

Unfortunately, that name is itself a geeky analogy. If you already understand “eventual consistency”, the analogy makes immediate sense. If not, probably not so much. At the risk of “explaining the joke” (which never works, right?), here is the analogy.

Say we have a critically important database. We store the data on 2 machines so if one machine crashes we still have access to our data.

Say the network between the 2 machines is flaky (protip: it is). When we write data to one machine & the network is down, we can either:

- Wait for the network to come back, which imposes unpredictable delays. If the data absolutely, positively have to be consistent between the 2 machines, we may be willing to pay this cost.
- Write the data to one machine now & catch up later. If we absolutely, positively need to be able to write data at all times but it’s okay if the data is a little out of sync, this is an acceptable tradeoff. We call this scenario “eventual consistency”.
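To make the “catch up later” option concrete, here is a toy sketch with hypothetical in-memory `Primary` & `Replica` classes rather than a real replicated database. Writes always succeed on the primary, queue up while the network is down, & are replayed to the replica, in order, once it comes back.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    data: dict[str, str] = field(default_factory=dict)


@dataclass
class Primary:
    replica: Replica
    data: dict[str, str] = field(default_factory=dict)
    pending: list[tuple[str, str]] = field(default_factory=list)  # writes not yet copied
    network_up: bool = True

    def write(self, key: str, value: str) -> None:
        """Always accept the write locally (no waiting), then try to ship it."""
        self.data[key] = value
        self.pending.append((key, value))
        self.sync()

    def sync(self) -> None:
        """Catch up: replay queued writes in order once the network is back."""
        if not self.network_up:
            return
        for key, value in self.pending:
            self.replica.data[key] = value
        self.pending.clear()


replica = Replica()
primary = Primary(replica)
primary.network_up = False
primary.write("alice", "CA")          # succeeds immediately
print(replica.data)                   # {}: the 2 machines disagree for now
primary.network_up = True
primary.sync()
print(replica.data == primary.data)   # True: consistent, eventually
```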

It’s this latter, “catch up later”, strategy that we draw our analogy from. Just as the 2 databases are consistent, but only eventually, our system data matches reality, but only eventually. We acknowledge that the system & reality are a little out of whack but we’re transparent about inconsistencies & fix them as well as possible as soon as possible.

I began reading your post and realized "hey -- I was just scribbling about that myself" and finally remembered what the context was.

The combination of immutable data (with an inherent timestamp) and the idea of an "effective date" is pretty damned powerful ... and much more representative of reality than the good ol' "just keep a snapshot, bytes are EXPENSIVE" days of dozens and dozens of megabytes in a refrigerator-sized behemoth!!!!

Hi Kent, thanks for this article. I love the principles you take us through to build up the argument that some complexity can be necessary. Like a rocket where every kilo of fuel is a tradeoff against the weight of it, we're faced with adding just enough complexity to solve the problem but not more. And the messy real-life complexity you describe here definitely resonates!

Reading the article, the words "event sourcing" kept ringing in my ears, and I think you're maybe not using those words because you're purposefully describing the problem from a business point of view? That includes the solution you propose, which fits into that same business context and makes your article very clear and understandable. But I wonder if you'd agree that event sourcing is one implementation that can achieve Eventual Business Consistency?

I say this, because an event would model the backdateable date and also inherently have timestamps showing when it was received, and so it naturally expresses the two timelines. And in an event sourced system, the data that needs to be reprocessed would also be available as events, which increases the likelihood of having all the necessary data for reprocessing, compared to only having access to the current state of normalized data.

More generally, I've been drawn to event sourcing architectures precisely because they reflect a fundamental reality of the world where messy, error-prone, back-filled corrections are necessary to keep everything in sync. Reality is dominated by events more than state, if I can get away with such philosophizing, which is how I feel it connects so well to what you describe.

I'd love to hear your thoughts on this, but above all else thank you for sharing the article.